618盘点|NFC防伪标签和NFC智能手表标签成年中备

618盘点|NFC防伪标签和NFC智能手表标签成年中备

2022-06-23

一年一度的618年中备货季步入尾声,相信大家在多轮促销中都已经买到了自已心仪的商品。今天来随小编盘点下2022年618期间NFC标签的热门应用有哪些吧!...

NFC标签/卡如何选型?NFC的常见应用有哪些?

NFC标签/卡如何选型?NFC的常见应用有哪些?

2022-05-07

NFC标签、NFC卡在实际应用中要如何选型?相信不少新客户在购买前都会纠结这个问题,NFC作为一种近年颇受欢迎的近场通信技术,其应用十分广泛。NFC芯片标签/卡因其可被手机NFC碰一碰...

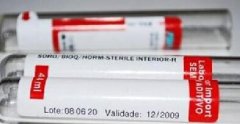

有了RFID电子标签,为什么药品标签还这么复杂?

有了RFID电子标签,为什么药品标签还这么复杂?

2022-05-05

大连杨金数据挖掘科技有限公司主要生产各种RFID电子标签,动物标签,图书防伪标签,资产管理标签,洗涤标签,NFC标签,rfid洗涤档案柜危化品管理,rfid读写设备,智能卡等,现有多条rfid标签和智...

RFID托盘标签在物流管理中的应用优势有哪些?

RFID托盘标签在物流管理中的应用优势有哪些?

2022-03-21

近年来全球市场竞争激烈,越来越多的企业通过整合产品的供应链,采用“按订单生产”,“零库存管理”,“多批次少批量”等业务来协调供应链的各个环节,从而确保快速、及时和...

RFID图书管理系统和RFID图书标签能为图书馆带来哪

RFID图书管理系统和RFID图书标签能为图书馆带来哪

2022-02-15

RFID图书管理系统是将RFID技术应用于图书馆领域管理,以帮助图书馆实现读者自助借阅、 24小时读者自助还书、快速馆藏资料清点、图书自动排架、顺架、更加防盗等功能。那么RFID图书...

RFID技术在智能交通中发挥的重要作用及分类

RFID技术在智能交通中发挥的重要作用及分类

2022-02-14

大连杨金数据挖掘科技有限公司主要生产各种RFID电子标签,动物标签,图书防伪标签,资产管理标签,洗涤标签,NFC标签,rfid洗涤档案柜危化品管理,rfid读写设备,智能卡等,现有多条rfid标签和智...